Reconstruction Results

Reconstruction of challenging unseen subjects with diverse appearance, geometry, and accessories. Surprisingly, our approach even generalizes to multiple persons thanks to the strong prior of 2D foundational models.

Reconstruction of unseen subjects from UBC Fashion dataset:

Reconstruction of unseen subjects from IIIT-3Dhuman dataset:

Reconstruction of unseen subjects from Sizer dataset:

Reconstruction of 'the Rock' Dwayne Johnson (left) and Taylor Swift (right) collected from the internet:

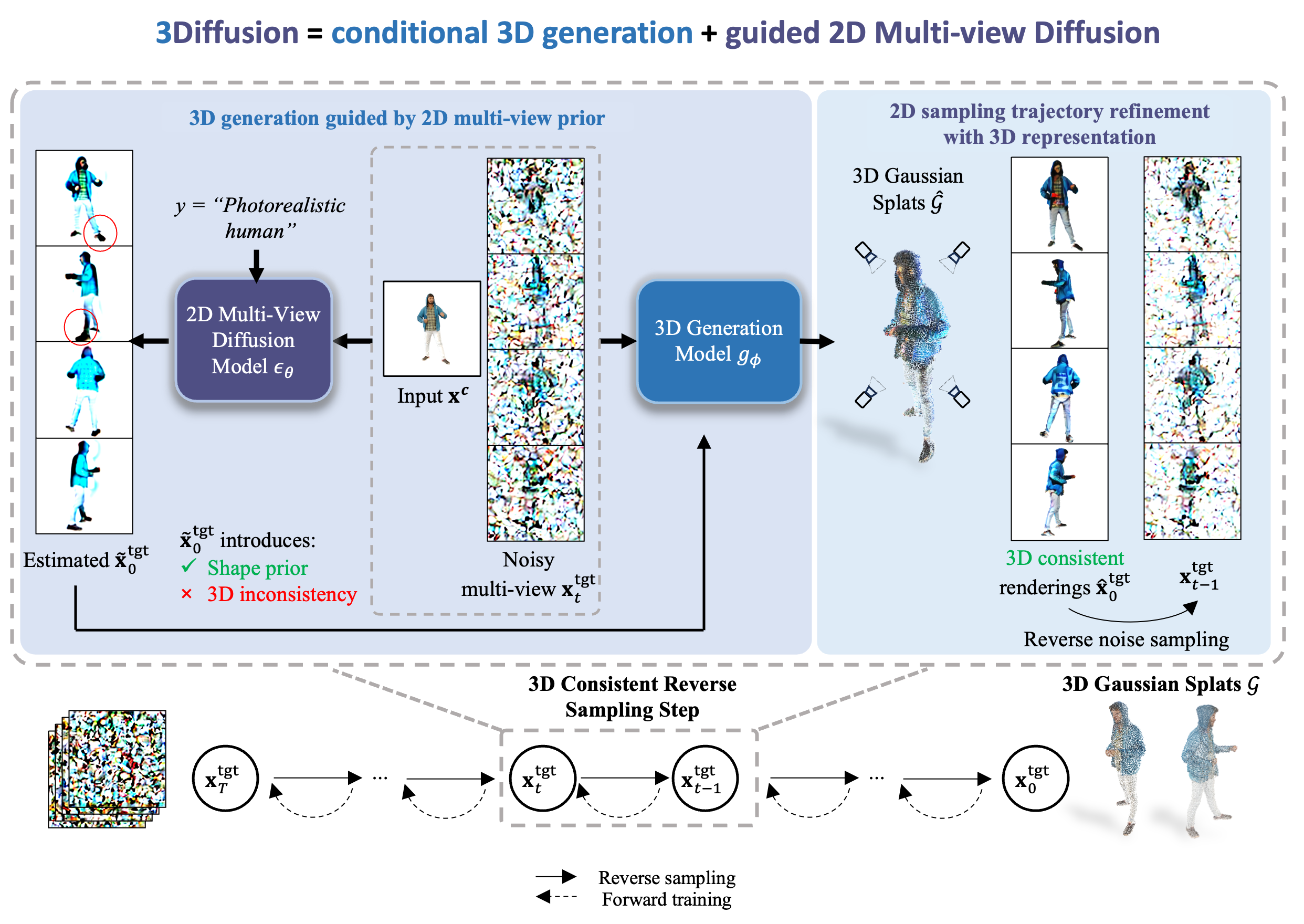

Generative Power in Reconstruction: We formulate the single image reconstruction problem as a conditional generative problem. In other word, we learn the conditional distribution of 3D representations given a single image. Hence, we sampled from distribution to reconstruct 3D, ensures a diverse but clear occluded region of the subject.