|

Yuxuan Xue

Hi there! I am a Ph.D. student in the Real Virtual Human group at the University of Tuebingen, supervised by Prof. Dr. Gerard Pons-Moll. I am affiliated with the International Max-Planck Research School for Intelligent Systems (IMPRS-IS).

My research interests include 3D/4D photorealistic reconstruction, generative models, and understanding of human appearance and behavior.

Prior to that, I spent a wonderful time at the Max-Planck-Institute for Intelligent Systems. I graduated with a double Master's degree in Mechanical Engineering and Robotics, both with distinction, from the Technical University of Munich (TUM) in 2022. I received my Bachelor's degree in Mechanical Engineering from the same university in 2020.

Email /

Google Scholar /

LinkedIn /

CV /

Twitter /

Github

|

|

|

News & Awards

|

[2025-08] Our Papers InfiniHuman and PhySIC are accepted to ACM SIGGRAPH Asia 2025.

[2025-06] Started my internship as a Research Scientist Intern with Javier Romero.

[2025-11] Our Paper ControlEvents is accepted to WACV 2026.

[2024-11] Our Paper Gen-3Diffusion is accepted to T-PAMI 2025.

[2024-09] Our Paper Human 3Diffusion is accepted to NeurIPS 2024.

[2024-07] Awarded $5000 from the OpenAI Research Access Program.

[2024-02] Our Paper E-LnR is accepted to IJCV 2024 (Vol.132).

[2024-01] Our Paper BOFT is accepted to ICLR 2024.

[2023-09] Our Paper OFT is accepted to NeurIPS 2023.

[2023-07] Our Paper NSF is accepted to ICCV 2023.

[2022-11] I am honored to receive the Best Student Paper Award at BMVC 2022.

[2022-06] I received my M.Sc. degree from TUM with distinction.

|

|

|

InfiniHuman: Infinite 3D Human Creation with Precise Control

Yuxuan Xue,

Xianghui Xie,

Margaret Kostyrko,

Gerard Pons-Moll

ACM SIGGRAPH Asia 2025, Hong Kong

BibTex

/

Arxiv

/

Website

/

Code

We propose a new generative model that generates photorealistic 3D avatars from text descriptions with precise control over body shape and clothing.

Our key idea is that foundation models already understand human appearance, so we distill 3D information from them, resulting in a dataset with 111K diverse subjects.

|

|

|

PhySIC: Physically Plausible 3D Human-Scene Interaction and Contact from a Single Image

Yuxuan Xue*,

Pradyumna Yalandur-Muralidhar*,

Xianghui Xie,

Margaret Kostyrko,

Gerard Pons-Moll

ACM SIGGRAPH Asia 2025, Hong Kong

BibTex

/

Arxiv

/

Website

/

Code

We propose a general reconstruction framework for holistically recovering the human and the environment from monocular images. Leveraging foundational depth and pose estimation models, we optimize under physical constraints to obtain physically plausible 3D human-scene interaction and contact.

|

|

|

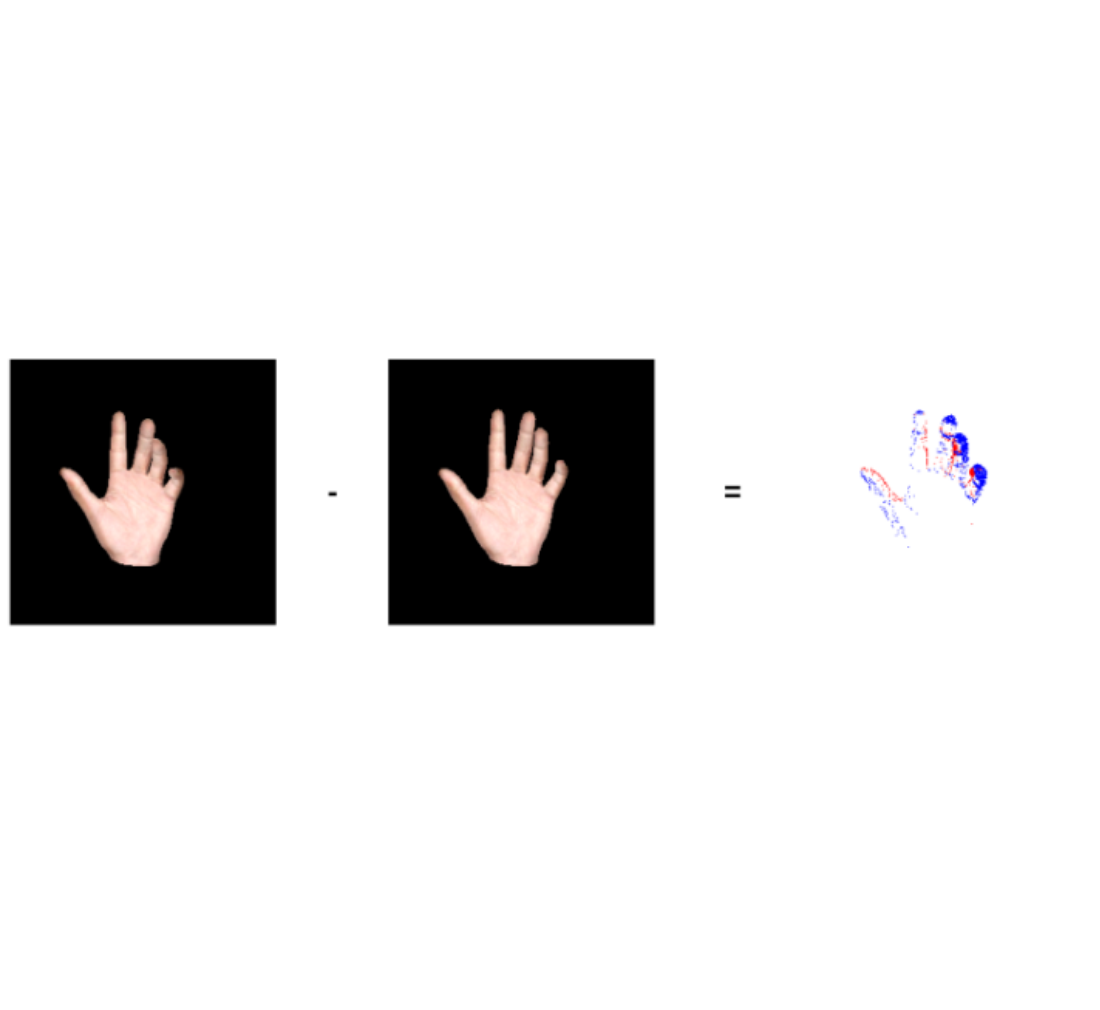

Gen-3Diffusion: Realistic Image-to-3D Generation via 2D & 3D Diffusion Synergy

Yuxuan Xue,

Xianghui Xie,

Riccardo Marin,

Gerard Pons-Moll

Transactions on Pattern Analysis and Machine Intelligence 2025

BibTex

/

Arxiv

/

Website

/

Code

We extend the idea of Human-3Diffusion to general objects. Our Gen-3Diffusion reconstructs a high-fidelity 3D representation from a single RGB image within 22 seconds using 11 GB of GPU memory, which enables efficient large-scale 3D generation.

|

|

|

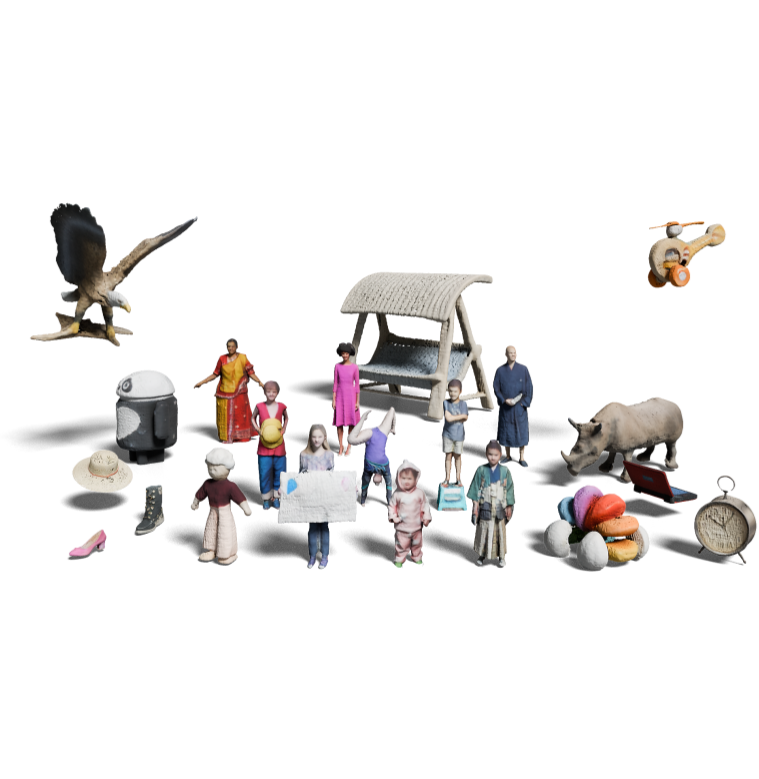

Human-3Diffusion: Realistic Avatar Creation via Explicit 3D Consistent Diffusion Models

Yuxuan Xue,

Xianghui Xie,

Riccardo Marin,

Gerard Pons-Moll

NeurIPS 2024, Vancouver

BibTex

/

Arxiv

/

Website

/

Code

We propose a new approach to reconstruct a high-fidelity avatar as 3D Gaussian Splats from a single RGB image.

Our approach improves the 2D multi-view diffusion process by using the reconstructed 3D representation to guarantee 3D consistency at the reverse sampling steps.

|

|

|

ControlEvents: Controllable Synthesis of Event Camera Data with Foundational Prior from Image Diffusion Models

Yuxuan Xue*,

Yixuan Hu*,

Simon Klenk,

Daniel Cremers,

Gerard Pons-Moll

WACV 2026, Tucson

BibTex

/

Arxiv

/

Website

/

Code

We propose ControlEvents, an event generative model that synthesizes realistic event camera data given conditions such as text, 2D skeleton, and 3D motion. The generated dataset can be used to enhance event-based computer vision tasks such as classification or pose recovery.

|

|

|

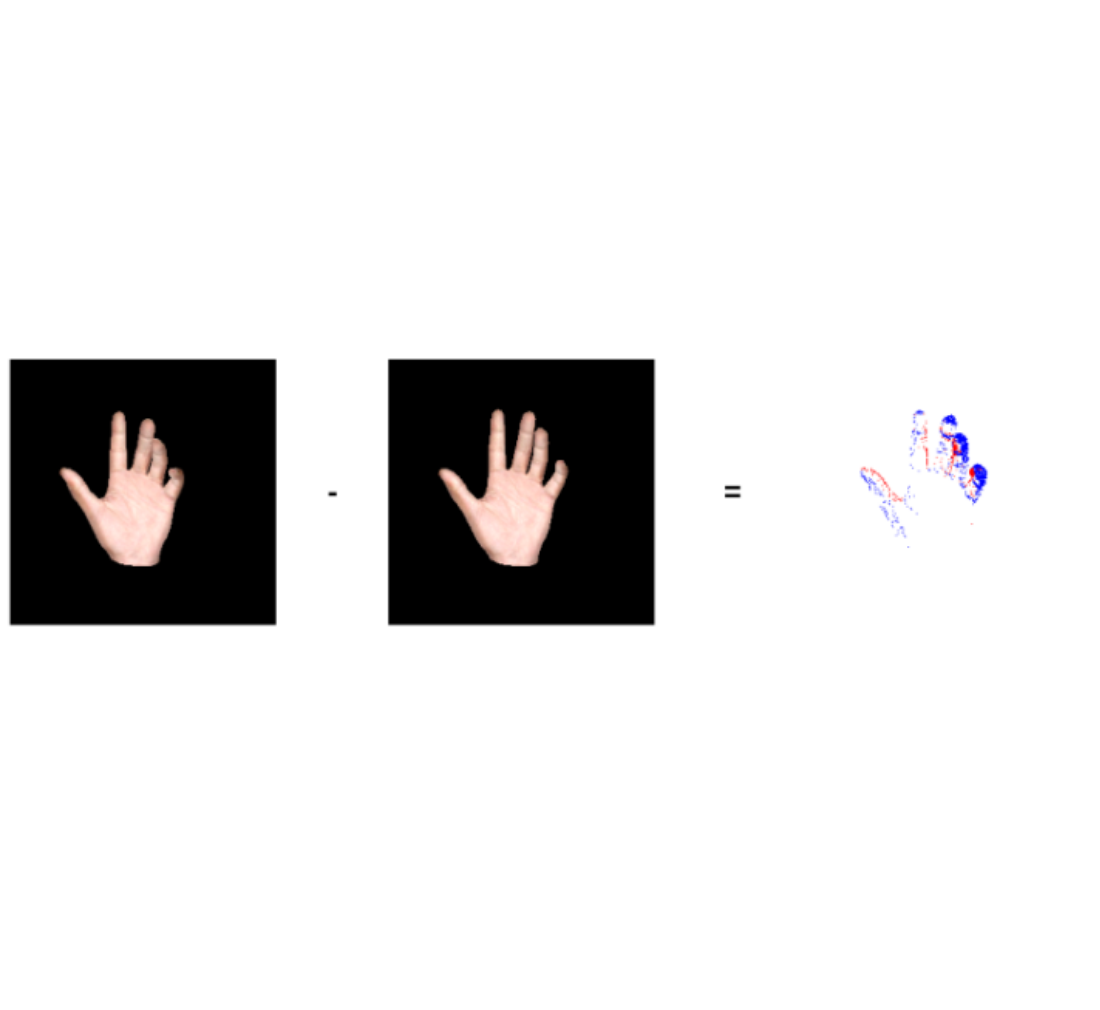

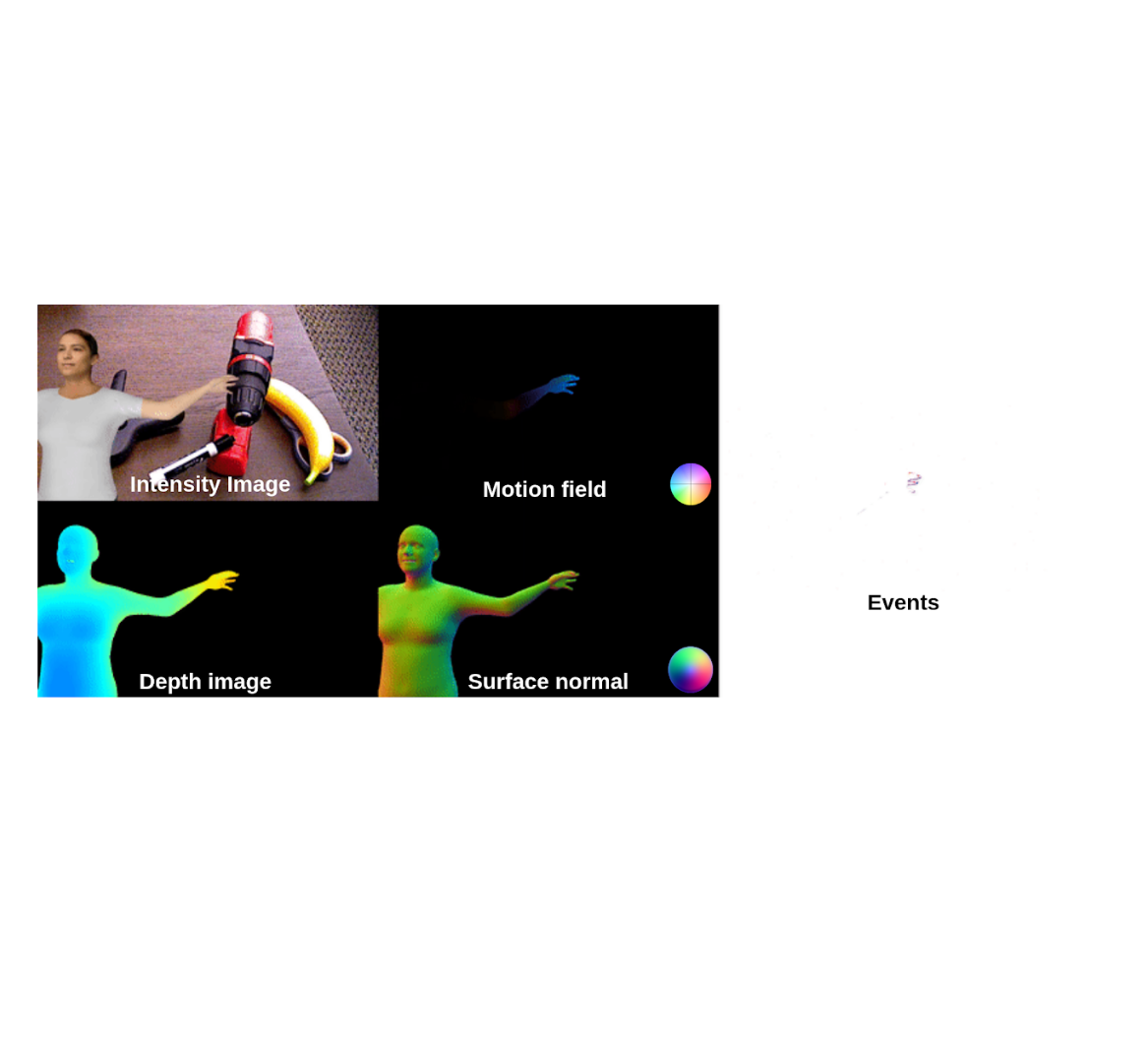

E-LnR: Event-Based Non-rigid Reconstruction of Low-Rank Parametrized Deformations from Contours

Yuxuan Xue,

Haolong Li,

Stefan Leutenegger,

Jörg Stückler.

IJCV Volume 132, pages 2943–2961

BibTex

/

Website

We propose E-LnR, an event-based approach that reconstructs non-rigid deformations in a low-rank parametrized space. This is a journal extension of our BMVC 2022 paper.

|

|

|

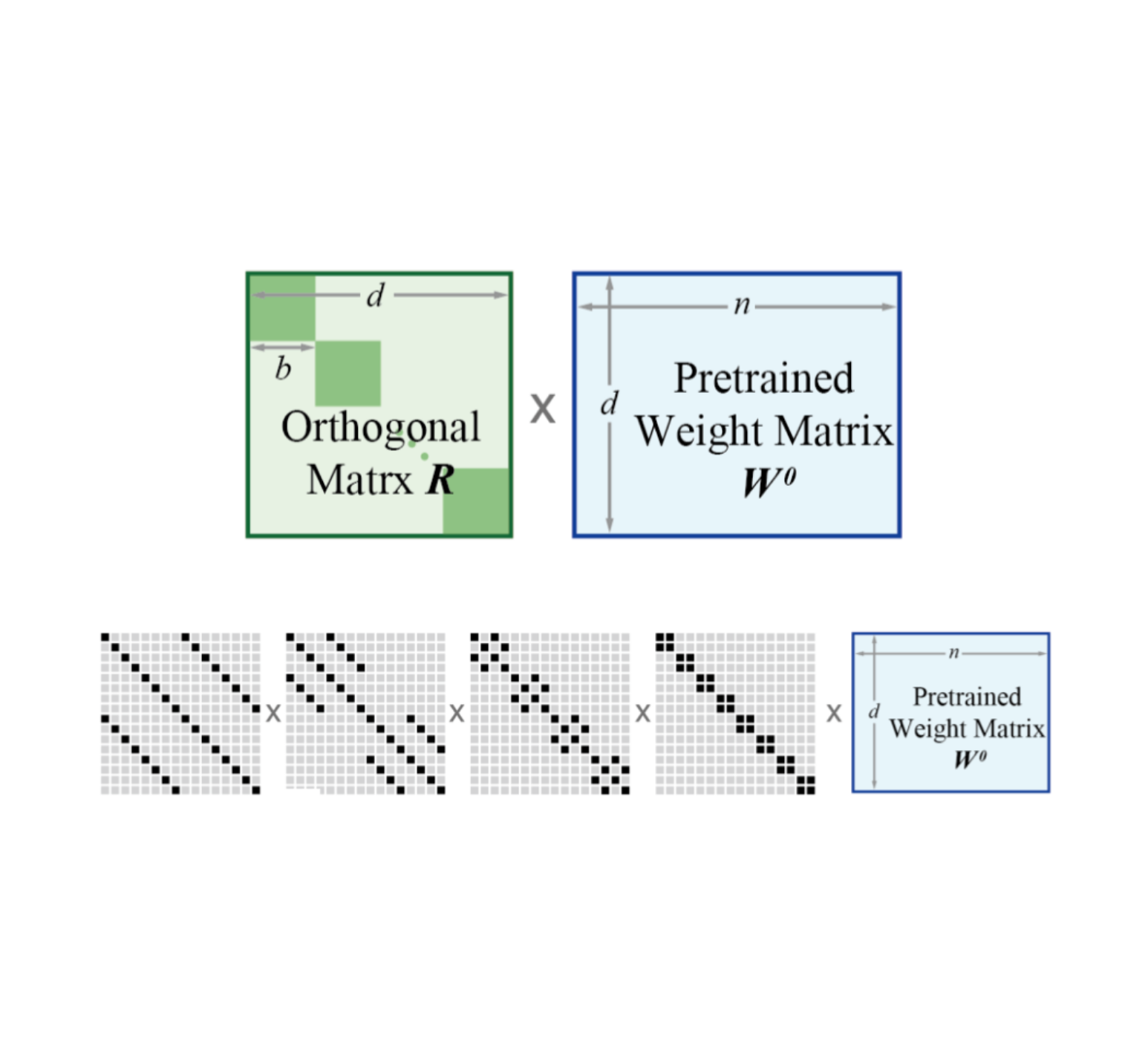

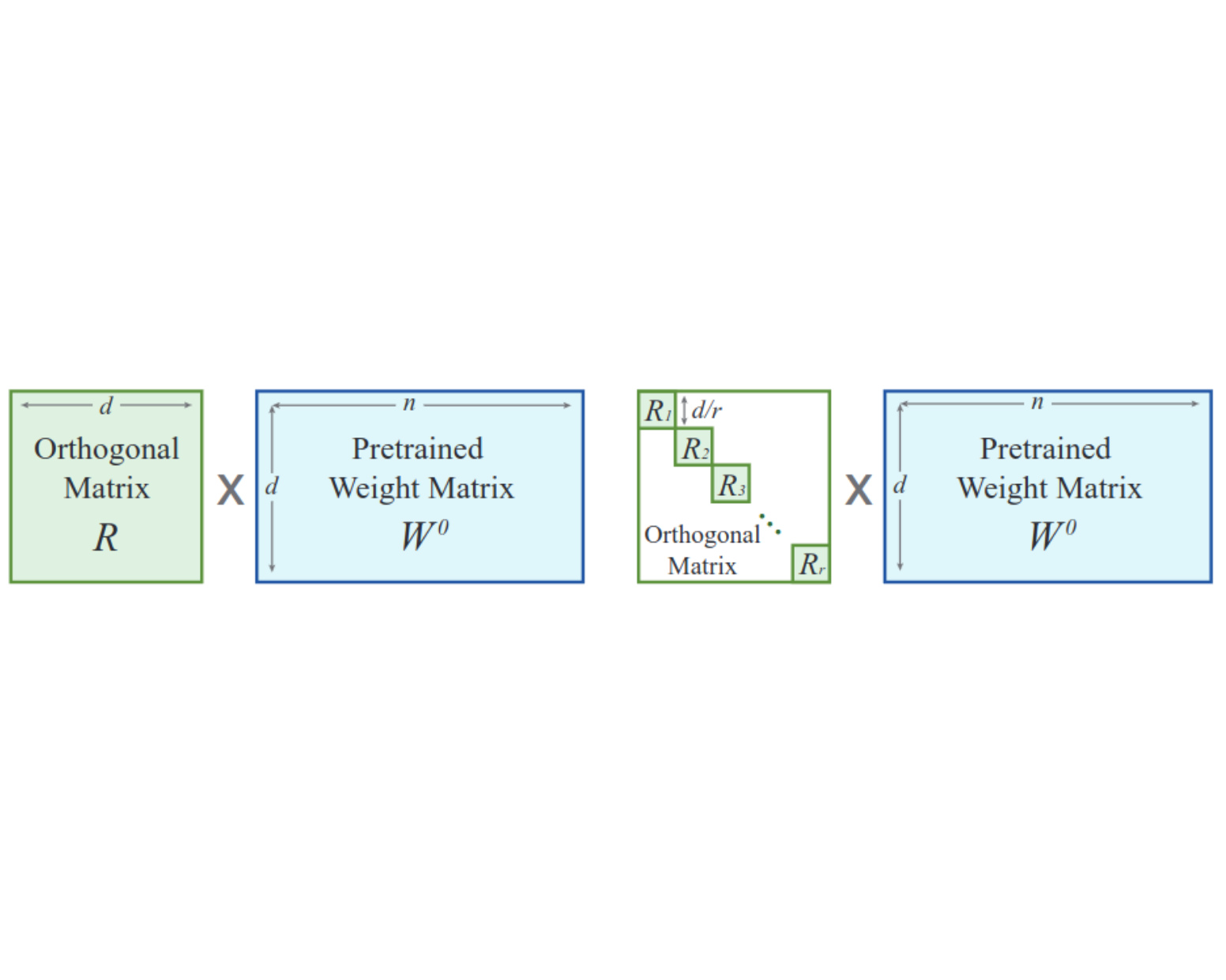

Parameter-Efficient Orthogonal Finetuning via Butterfly Factorization

Weiyang Liu*,

Zeju Qiu*,

Yao Feng**,

Yuliang Xiu**,

Yuxuan Xue**,

Longhui Yu**,

Haiwen Feng,

Zhen Liu,

Juyeon Heo,

Songyou Peng,

Yandong Wen,

Michael J. Black,

Adrian Weller,

Bernhard Schölkopf.

ICLR 2024, Vienna

BibTex

/

Arxiv

/

Website

/

Code

We propose BOFT (Orthogonal Butterfly), a general orthogonal finetuning technique with butterfly factorization that effectively adapts foundation models to different tasks such as Vision, NLP, Math QA, and Controllable Generation.

|

|

|

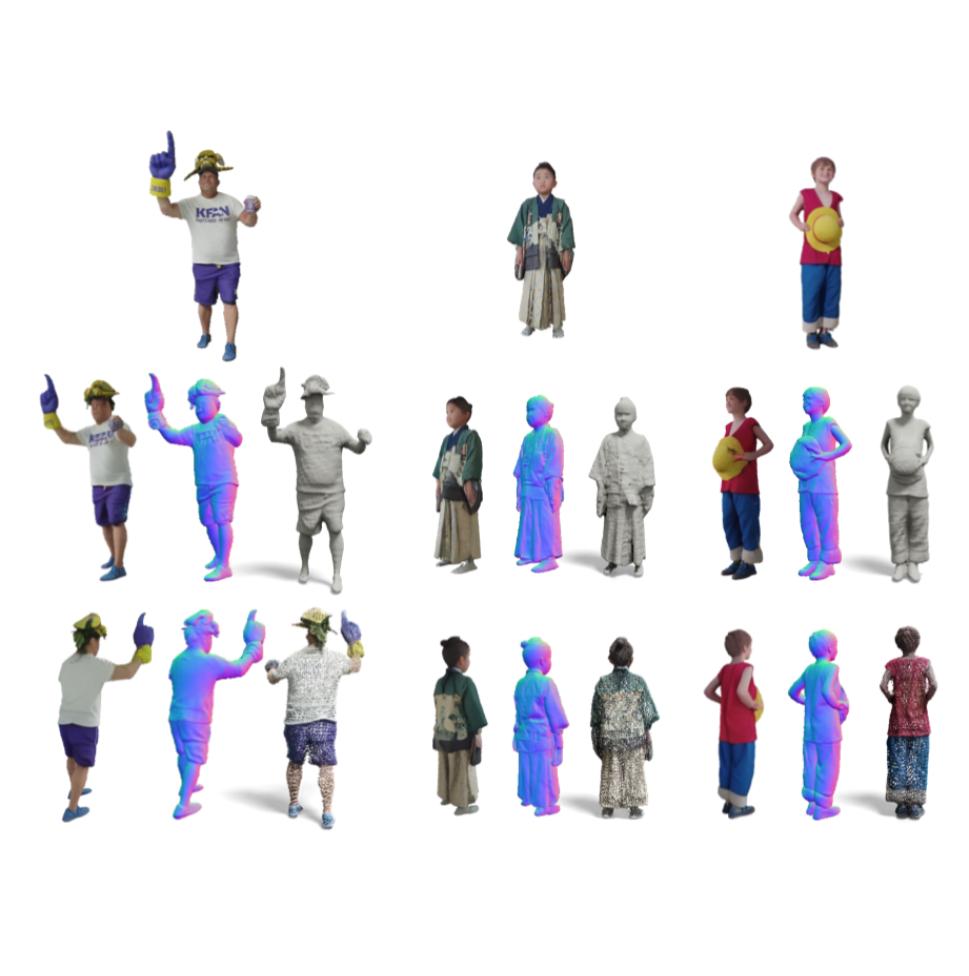

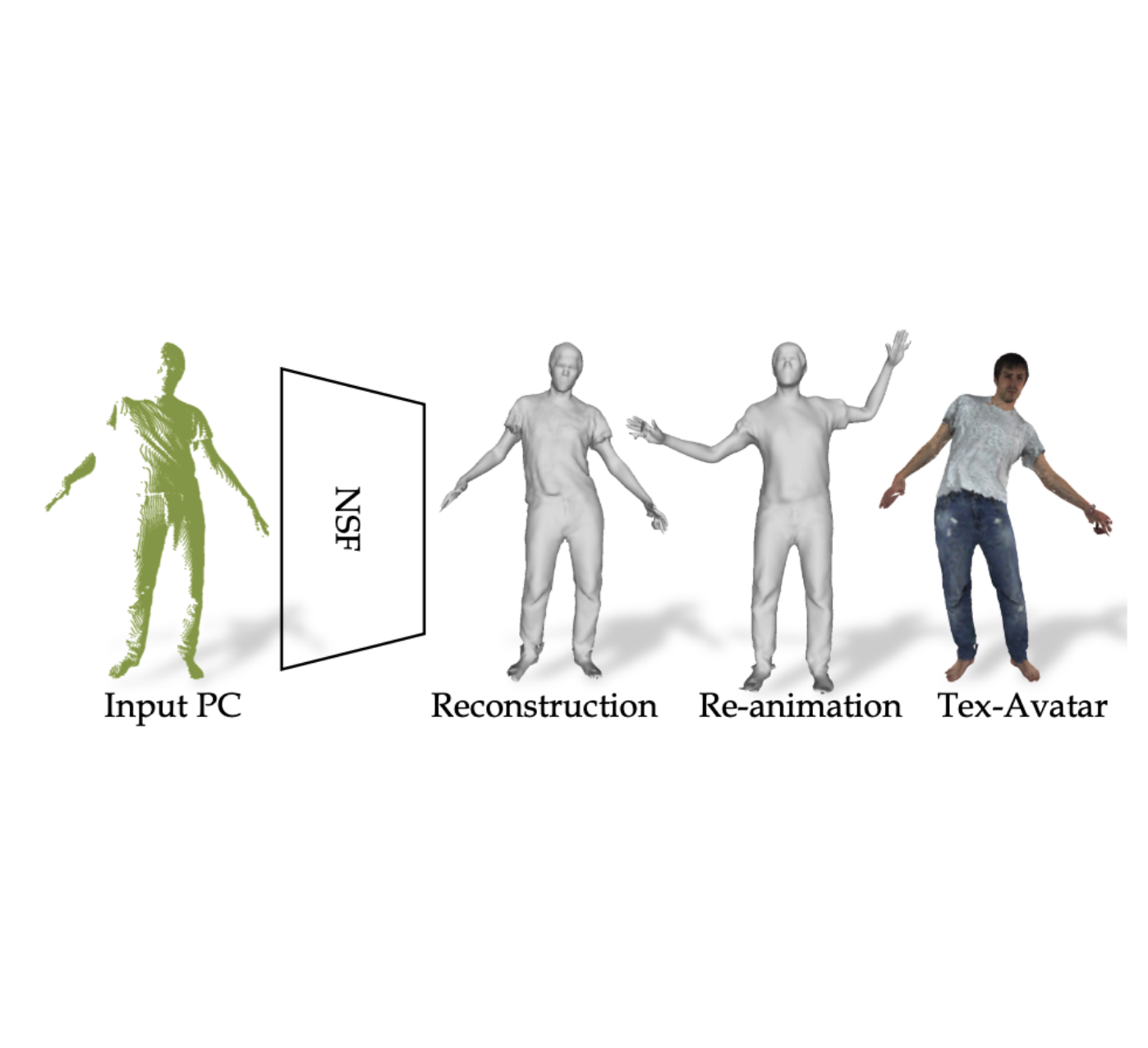

NSF: Neural Surface Fields for Human Modelling from Monocular Depth

Yuxuan Xue*,

Bharat Lal Bhatnagar*,

Riccardo Marin,

Nikolaos Sarafianos,

Yuanlu Xu,

Gerard Pons-Moll⚑,

Tony Tung⚑.

ICCV 2023, Paris

BibTex

/

Arxiv

/

Website

/

Poster

/

Video (5min)

/

Code

We propose a new approach that defines a neural field on the surface for reconstructing animatable clothed humans from monocular depth observations.

Our approach directly outputs coherent meshes across different poses at arbitrary resolution.

|

|

|

Controlling Text-to-Image Diffusion by Orthogonal Finetuning

Zeju Qiu*,

Weiyang Liu*,

Haiwen Feng,

Yuxuan Xue,

Yao Feng,

Zhen Liu,

Dan Zhang,

Adrian Weller,

Bernhard Schölkopf.

NeurIPS 2023, New Orleans

BibTex

/

Arxiv

/

Website

/

Code

We propose Orthogonal Finetuning (OFT), a fine-tuning approach for adapting text-to-image diffusion models to downstream tasks.

OFT can preserve hyperspherical energy to maintain the semantic generation ability of the foundation models.

|

|

|

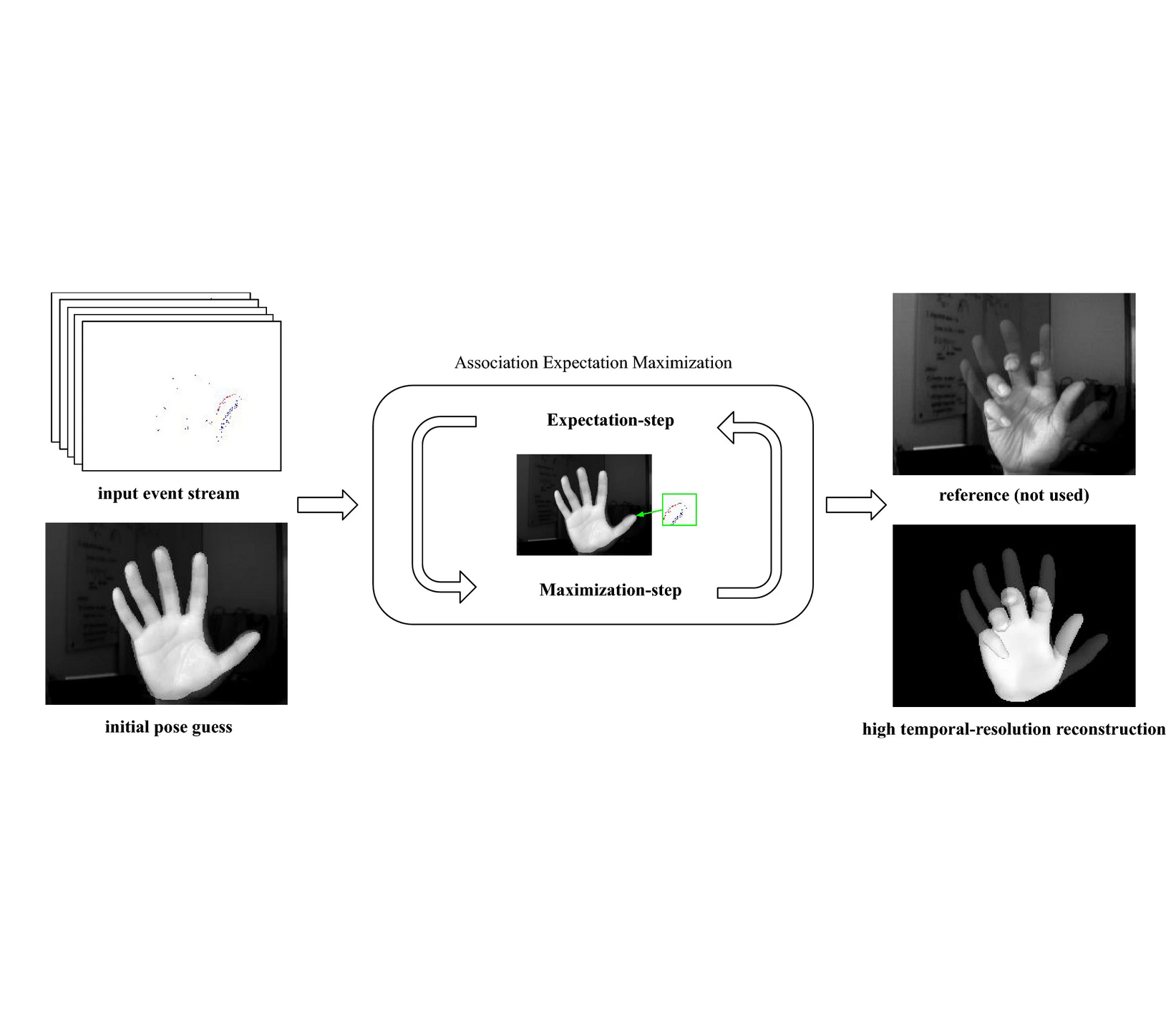

Event-based Non-Rigid Reconstruction from Contours

Yuxuan Xue,

Haolong Li,

Stefan Leutenegger,

Jörg Stückler.

BMVC 2022, London, Oral, Best Student Paper Award

BibTex

/

Arxiv

/

Website

/

Oral Presentation (11min)

/

Poster

We propose a new approach for reconstructing fast non-rigid object deformations using measurements from event-based cameras.

Our approach estimates object deformation from events at the object contour within a probabilistic optimization (EM) framework.

|

|

|

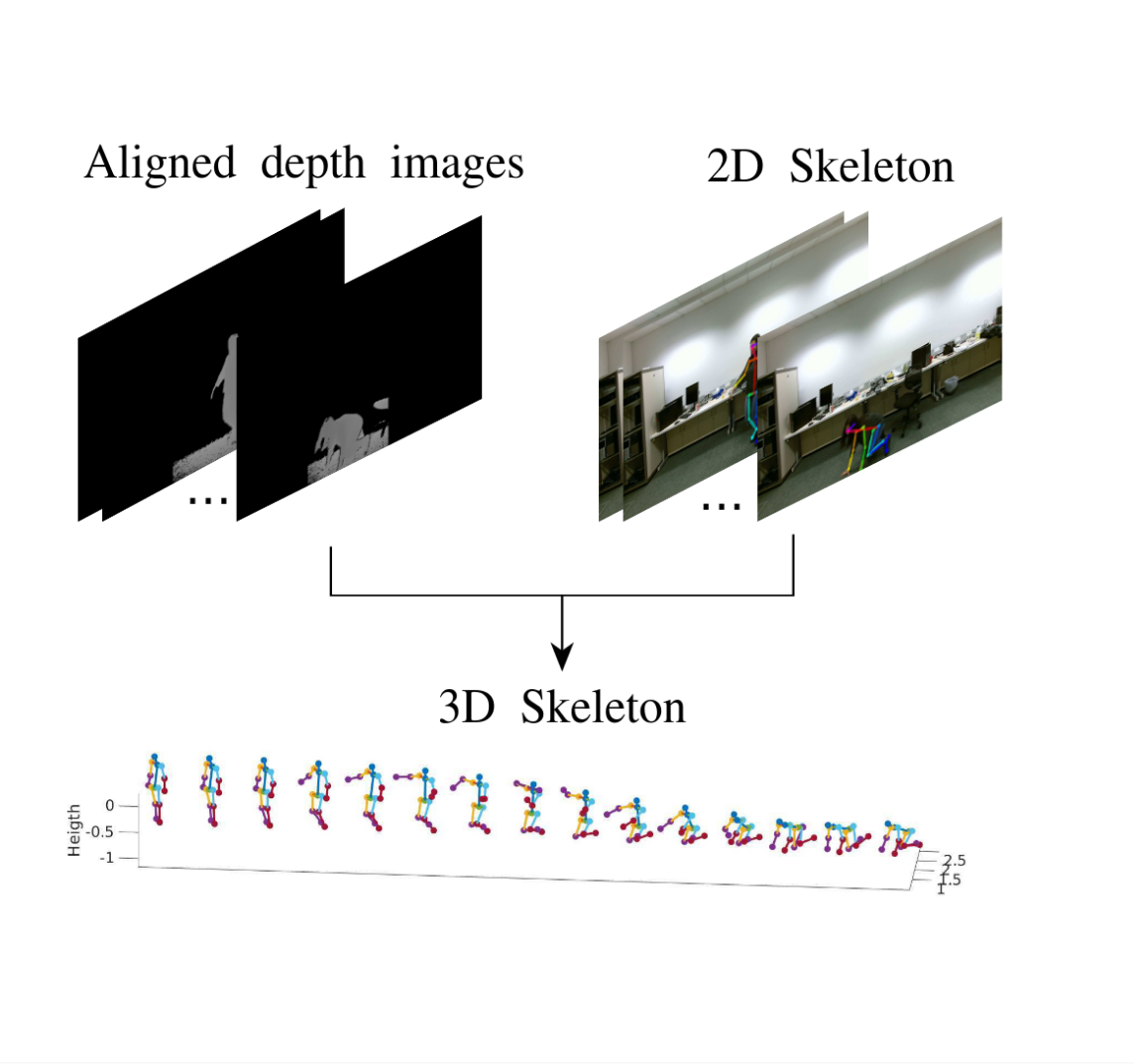

Robust event detection based on spatio-temporal latent action unit using skeletal information

Hao Xing,

Yuxuan Xue,

Mingchuan Zhou,

Darius Burschka.

IROS 2021, Prague

BibTex

/

Arxiv

We present a new method for detecting event actions from skeletal information in RGBD videos. The proposed method uses a Gradual Online Dictionary Learning algorithm to cluster and filter skeleton frames. Additionally, the method includes a latent unit temporal structure to better distinguish event actions from similar actions.

|

|

Academic Services

|

Conference Reviewer: ICCV 2023-2025, CVPR 2024-2025, ECCV 2024, NeurIPS 2024-2025, ICLR 2025, ICML 2025

Journal Reviewer: T-PAMI, TVCG, TOG (SIGGRAPH/SIGGRAPH Asia)

|

|